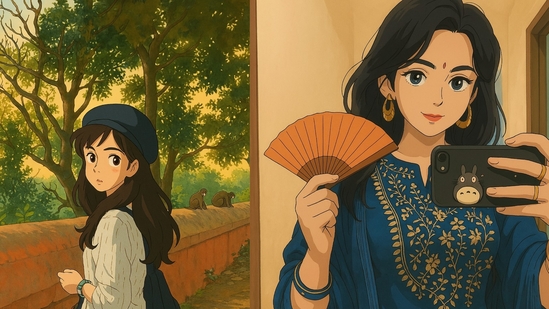

The rise of Artificial Intelligence (AI) has brought a wave of creativity to our digital lives. One trend that has taken social media by storm is turning selfies into charming, anime-style portraits inspired by Studio Ghibli.

AI-powered tools such as ChatGPT and Midjourney have made this possible with just a few clicks, and Instagram, Facebook, and X are now flooded with these whimsical avatars.

It may seem like harmless fun, but experts warn that behind the adorable filters lies a serious threat to your personal data.

Your Data for Their Output

Cyber expert Gautam S. Mengle, Assistant Vice President at CyberFrat, warns that while users are busy enjoying AI features, they often overlook what they give up in return: their personal data. “Whether it’s ChatGPT, Gemini, Grok, Midjourney, or other AI tools, the moment we log in using our email or social media accounts, we’re allowing them access to bits and pieces of our lives,” Mengle explains.

Every input you give, from selfies to text prompts, feeds into what is called profiling.

Profiling is the process by which AI builds a digital picture of who you are: your interests, travel patterns, eating habits, shopping preferences, and even your social behaviour. This picture is assembled from your online activity, from the posts you like and the articles you read to what you type into AI platforms.

Where Is This Data Stored?

Another question too few people are asking: where is all this data stored, and how secure is it?

Mengle points out that major AI platforms, including ChatGPT, have already suffered data breaches. Any server that stores user data becomes a target for hackers, and if it is breached, your personal information could fall into the wrong hands.

The Real Risks: Deepfakes, Identity Theft & Cybercrime

The more data you give AI tools, the greater the risk. Mengle warns that photos and personal information can be:

- Used to create deepfakes

- Used to generate fake IDs

- Used to build realistic but false online profiles

- Sold to third parties or cybercriminals

These threats are not merely theoretical; they are real and growing.

The Rise of Malicious AI

There’s another layer of concern: AI clones. Mengle points out that once a powerful tool like ChatGPT hits the market, replicas quickly follow, and not all of them are built with good intentions.

GhostGPT, for example, is a clone reportedly created by malicious hackers to help spread viruses and other malware.

The same AI that helps us can also be weaponized, which raises urgent ethical and security questions.

So, What Can Users Do?

Before you upload that selfie or type your life story into an AI tool, here are a few simple but crucial steps to follow:

1. Read Privacy Policies: Always check whether an AI tool stores your data temporarily or long-term. Be aware of what you’re agreeing to before using it.

2. Remove Metadata: Photos often carry hidden details such as the location and time they were taken. Strip this metadata before uploading.

3. Think Before You Share: If you feel the platform could compromise your privacy, avoid sharing sensitive personal details. Decide consciously what you’re willing to reveal.
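For JPEG photos, the location and time details mentioned in step 2 usually live in EXIF data stored in the file's APP1 segment. The minimal Python sketch below, which uses only the standard library, illustrates how a metadata-stripping tool might work: it walks the JPEG's segment list, drops APP1 (EXIF/XMP) and COM (comment) segments, and copies the compressed image data untouched. The function name `strip_jpeg_metadata` is hypothetical, and the sketch assumes a typical JPEG whose metadata sits before the first scan; it does not handle PNG, HEIC, or other formats.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker every JPEG begins with
EOI = 0xD9         # end-of-image marker
SOS = 0xDA         # start of scan: compressed pixel data follows
APP1 = 0xE1        # holds EXIF (camera info, GPS location, timestamps) and XMP
COM = 0xFE         # free-text comment segment


def strip_jpeg_metadata(data: bytes) -> bytes:
    """Return a copy of a JPEG with its APP1 (EXIF/XMP) and COM segments removed.

    Sketch only: assumes metadata appears before the first scan (the usual
    layout). Everything from the start-of-scan marker onward is copied
    byte-for-byte, so the image itself is not re-encoded.
    """
    if not data.startswith(SOI):
        raise ValueError("not a JPEG file")
    out = bytearray(SOI)
    pos = 2
    while pos + 1 < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at byte {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # padding byte before a marker; drop it
            pos += 1
            continue
        if marker == EOI:  # image ended before any scan data
            out += data[pos:pos + 2]
            break
        if pos + 4 > len(data):
            raise ValueError("truncated segment header")
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker == SOS:
            out += data[pos:]  # scan header, compressed data, and EOI, verbatim
            break
        if marker not in (APP1, COM):
            out += data[pos:end]  # keep other segments (e.g. JFIF, tables)
        pos = end
    return bytes(out)
```

To use it, read a photo in binary mode (for example, a placeholder file such as `selfie.jpg`), pass the bytes to `strip_jpeg_metadata`, and write the result to a new file before uploading. Copying the scan data verbatim avoids the quality loss of re-saving the image; for everyday use, an established image-editing tool achieves the same result with less risk of mishandling unusual files.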

AI is powerful, fun, and full of promise, but with great power comes great responsibility. As users, we must stay informed, cautious, and proactive about our digital privacy, because behind every cute anime filter there could be a hidden data mine.